September 17, 2024

Most recently I have been working on Q+, a superscalar

processor core with vector registers and came up with the following for

implementing the RAT and register renamer.

Implementing a RAT in an FPGA with a Circular-List Renamer

© 2024

Robert Finch

Introduction

The RAT (register alias table) is a component in a superscalar design that tracks register mappings between architectural (logical) register numbers and physical register numbers.

Mapping physical registers for logical ones is used to remove false

dependencies between instructions. The RAT typically must operate within

one processor clock cycle to avoid increasing the depth of the pipeline.

There are several components to register renaming in the CPU.

• The register renamer.

• The checkpoint RAM.

• The checkpoint valid RAM.

The register renamer is a component that provides a physical

register name for a logical one. The checkpoint RAM stores the mappings of

physical registers for logical ones for multiple checkpoints used during

branches. The checkpoint valid RAM holds a valid bit for each physical

register for each checkpoint. During a branch miss, a checkpoint of the

register mappings and valid state is done. The checkpointing includes

copying the entire state from the current mappings.

RAT Dependency Logic

WAW, write-after-write,

and RAW, read-after-write dependencies must be detected and accounted for

when mapping registers. This typically involves priority logic to cause the

newest register mapping to be loaded into the RAT in precedence over older

ones. The prioritizing logic is used as a spatial multiplexor. Making use of FPGA characteristics, this can be turned into a temporal prioritizer, which is more hardware efficient. The prioritizing logic can be removed, leaving only the multiplexor.

Implementation

RAMs in the FPGA are just about as fast as logic, given that logic is implemented with RAMs. So, for a given logic depth, the RAM ports can be prioritized temporally while still meeting CPU clock requirements. In the Q+ design,

the RAT write ports are implemented by multiplexing them using a five-times

overclock of the CPU clock to provide up to four write ports per CPU clock

cycle. This takes the place of priority encoding the inputs and handles WAW

dependencies. By carefully choosing the order the ports are processed in,

newer RAT entries can overwrite older ones, so the RAT mappings always

reflect the newest association. Effectively older mappings are loaded into

the RAT, but they get overwritten by newer ones.
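
The ordering idea can be modelled in a few lines of software. The sketch below is a behavioural model in Python, not the Q+ HDL; the function name rat_write_group and the (logical, physical) tuple layout are assumptions made for illustration. The write requests of one CPU cycle are applied one per fast-clock slot, oldest first, so a later write to the same logical register simply lands on top of an earlier one and no priority encoder is needed.

```python
def rat_write_group(rat, writes):
    """Apply one CPU cycle's worth of RAT writes, one per fast-clock slot.

    rat    -- list mapping logical register number -> physical register number
    writes -- up to four (logical, physical) pairs, ordered oldest to newest
    """
    if len(writes) > 4:
        raise ValueError("at most four write ports per CPU clock")
    for logical, physical in writes:   # slot 0 is oldest, last slot is newest
        rat[logical] = physical        # a newer write overwrites an older one
    return rat


# Two instructions in the same group both target logical register 5 (WAW).
rat = rat_write_group(list(range(8)), [(5, 40), (2, 41), (5, 42)])
print(rat[5], rat[2])
```

Because the newest instruction is written last, register 5 ends up mapped to the newest physical register without any comparison between the ports.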

RAM Reduction

Temporally prioritizing

the write ports means reducing the amount of RAM required to represent the

RAT by a factor of four. In Q+ the RAT checkpoint valid RAM requires eight write ports; the multiplexing reduces this to two. A RAM with two write ports

is easily handled by duplicating the RAM and using a live-value table to

track which RAM contains the valid mapping. A side-effect of reducing the

RAM requirements significantly is that the size of the RAT is reduced which

may help the timing.
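
As an illustration of the live-value table idea, here is a small behavioural model in Python. It is only a sketch: the class name TwoWritePortRam and its methods are hypothetical and are not taken from the Q+ source. Each write port owns its own copy of the RAM, and the live-value table records which copy was written most recently for each address.

```python
class TwoWritePortRam:
    """Two write ports built from two single-write-port banks plus an LVT."""

    def __init__(self, depth):
        self.banks = [[0] * depth, [0] * depth]  # bank i is written only by port i
        self.lvt = [0] * depth                   # which bank holds the live value

    def write(self, port, addr, value):
        self.banks[port][addr] = value
        self.lvt[addr] = port                    # remember the most recent writer

    def read(self, addr):
        return self.banks[self.lvt[addr]][addr]


ram = TwoWritePortRam(16)
ram.write(0, 3, 111)   # port 0 writes address 3
ram.write(1, 3, 222)   # port 1 writes the same address later
ram.write(0, 7, 333)
print(ram.read(3), ram.read(7))
```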

Circular List (CL) Renamer

Regarding the CL-renamer: the typical FIFO-based approach allows "perfect" allocation of registers that never stalls

the pipeline. Registers are always available from a pool of registers. For

Q+ an imperfect economy renamer is used which

uses a minimum number of LUTs. It is implemented as a simple circular list

of registers using FPGA shift registers rather than a fifo.

The register list has an available bit associated with each register. Every

clock cycle when a register name is needed the list is rotated. The

register name rotated into view may not be available, in which case the

CPU's pipeline is stalled, and the register list rotated again. Because the

oldest registers are rotated into view first, they are typically available

for allocation because they have been freed at the commit stage, long ago.

The rename list has ¼ of the physical registers in it and four separate

lists are used to make rename registers available for four targets each

clock cycle. Because there are 127 registers in the list, it is 127

instruction groups before the same register comes up for allocation. In

most cases the register will be free already. The implementation is not far

enough along to measure the impact of this sort of renamer.
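
The behaviour of one such list can be sketched in software. The model below is a Python illustration under stated assumptions, not the Q+ implementation: the class name CircularListRenamer, the rotate-then-look order, and the tiny three-register list are all made up for the example.

```python
from collections import deque


class CircularListRenamer:
    """One circular list of physical registers, each with an available bit."""

    def __init__(self, regs):
        self.ring = deque(regs)               # head of the deque is "in view"
        self.avail = {r: True for r in regs}

    def allocate(self):
        """Rotate once; return the register in view, or None to signal a stall."""
        self.ring.rotate(-1)
        reg = self.ring[0]
        if not self.avail[reg]:
            return None                       # pipeline stalls, try again next clock
        self.avail[reg] = False
        return reg

    def free(self, reg):
        """Called at commit when the old mapping is no longer needed."""
        self.avail[reg] = True


r = CircularListRenamer([10, 11, 12])
first = [r.allocate() for _ in range(3)]      # every register handed out once
stalled = r.allocate()                        # nothing free yet: stall
r.free(12)
r.free(11)
after = [r.allocate(), r.allocate()]
print(first, stalled, after)
```

The last allocation in the example stalls even though register 11 has been freed, because 11 is not the register rotated into view. That is the "imperfect" part of the trade-off; with a long list the register in view has usually been free for a long time.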

Renamer Overclock

The circular-list renamer (CL-renamer) is

simple enough that it has the potential to operate at a multiple of the CPU

clock rate. FPGA shift registers are extremely fast. This would allow

stalls to be avoided by skipping over registers not available for

allocation while remaining with the same CPU clock.
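
A rough software picture of the overclocking idea, again as a Python sketch with hypothetical names (allocate_overclocked, ratio): the list is allowed several rotations within one CPU clock, so registers that are not available can be skipped instead of causing a stall. The ratio of renamer clock to CPU clock is an assumed parameter.

```python
from collections import deque


def allocate_overclocked(ring, avail, ratio):
    """Try up to `ratio` rotations within one CPU clock; None means stall."""
    for _ in range(ratio):
        ring.rotate(-1)
        reg = ring[0]
        if avail[reg]:
            avail[reg] = False
            return reg
    return None


ring = deque([20, 21, 22, 23])
avail = {20: True, 21: False, 22: False, 23: True}
same_clock = allocate_overclocked(ring, avail, 1)    # one rotation: lands on a busy register
overclocked = allocate_overclocked(ring, avail, 4)   # four rotations: skips busy registers
print(same_clock, overclocked)
```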

Software Stall Removal

Another approach to

avoiding stalls by the CL-renamer would be for

the compiler to insert MOV instructions periodically for values that are

long term. For instance, if a value is resident in a register for more than

100 instructions, then it could be moved to the same register forcing a

rename operation and releasing the old register. However, this would

require that the compiler know about the move instruction during the

optimization stage, so that the MOV does not get optimized away.

March 25, 2024

Is there a calculus of

rules? I asked this question on reddit a while ago and got a couple of

references to various forms of calculus.

https://www.reddit.com/r/calculus/comments/118qoqp/is_there_a_calculus_of_rules/

Rather than post my notion on reddit again, I thought I would just put it on my webpage.

Basically, the idea comes from the fact that there are rules and then more

general rules to express mathematics. If there are rules, and more general

rules, is it possible to graduate between the rules at a fine level, as is

done for other forms of calculus?

Just thinking about the

calculus of rules some more, and noting that

irrational numbers are defined by rules. The difference between two

irrational numbers can be taken and might be used to establish a set of

rules in between. So, take the difference between pi and e for instance.

Let fpi() be the rule determining pi and fe() be the rule determining e. Then fpi() - fe() = fpime(), and fe() plus this difference in rules, fpime(), would be equal to fpi(). One could differentiate the rule to infinity by taking the limit as the size of each difference in rule approaches zero.

Limit: (fpi() - fe()) / n

where n goes to infinity. I think that would result in a continuous set of rules between e and pi. <- I think this may just be a calculus of variances though, not of rules.

December 15, 2023

Started working on a new CPU core in November called Qupls or Q+. It is a four-way out-of-order superscalar

core taking a different approach than the previous Thor cores. While being

four-way and having a 32-entry ROB, the core is smaller than the two-way

Thor core. The core supports a 64-entry general purpose register file.

Instructions are placed in blocks and processed in groups of four. More

documentation for the core can be found in github

at: https://github.com/robfinch/Qupls/tree/main/doc

Also updated the website to remove advertising. It was

not generating any revenue and maintaining it is a pita.

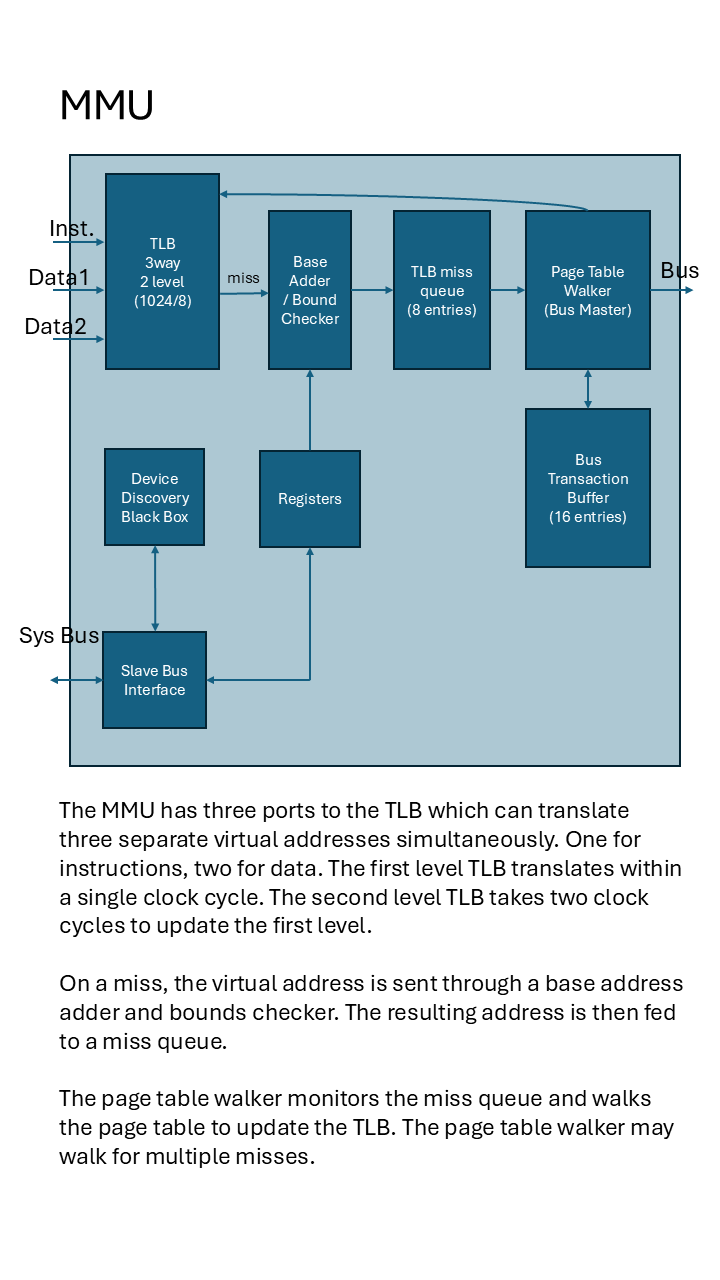

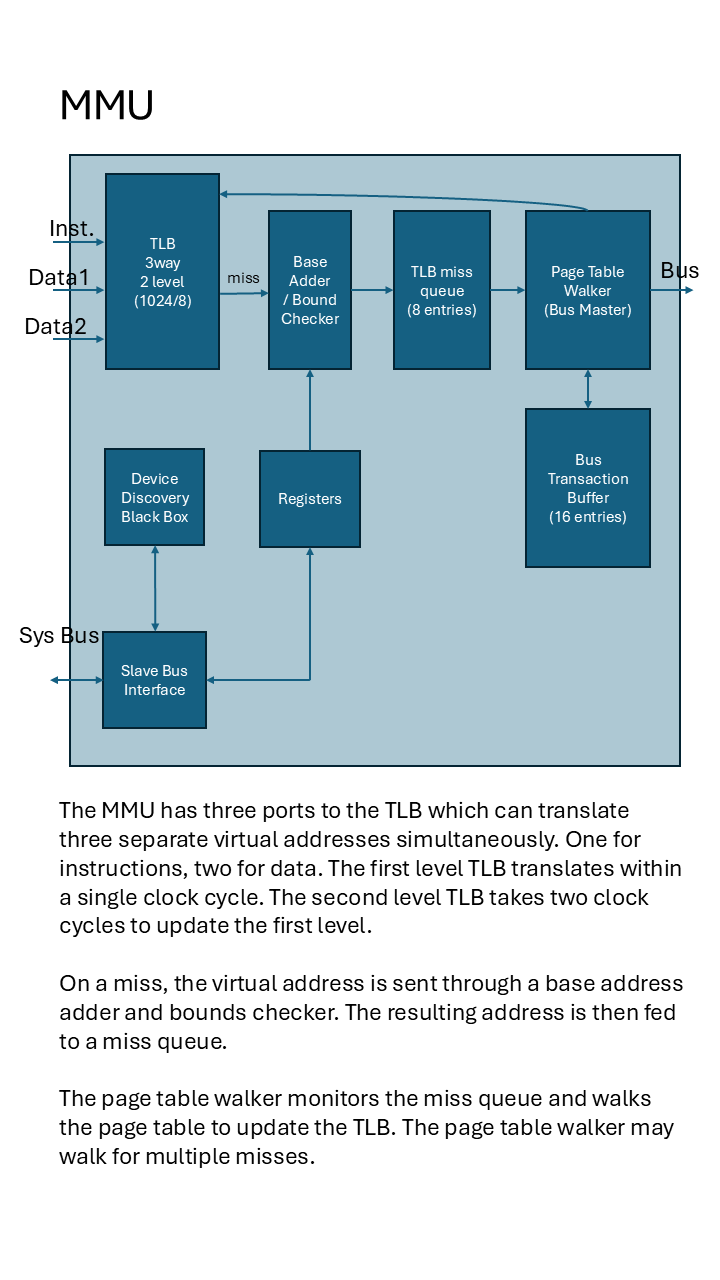

March 20, 2022

I have been working on Thor2022 since January. I reduced the number of registers from 64 in Thor2021 to 32 and modified a few of the instructions. Many of the opcodes remain the same. My most

recent work has been on a hash table for virtual memory. The hash table is

implemented entirely with block RAM using about 1/3 of the RAM in the FPGA.

The virtual memory pages are 64kB in size which means the translation table

is reasonably small for the 512MB RAM on the board. There are 16384 entries

allowed for in the hash table which are grouped together into groups of

eight. So, there are 2048 groups of entries. A hash of the virtual address

selects a group of entries. Then all eight entries are searched in parallel

for a translation match. Given the large size of a page, I came up with a

way of using 1kB sections of the page. Multiple virtual addresses may map

to different sections in the same physical page. It has kept me busy for a

while.
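
The group lookup can be sketched in software as follows. This is a Python illustration only: the hash function, the (virtual page, physical page) entry layout, and the function names are assumptions, and the 1kB section mechanism is left out. The sizes come from the description above: 16384 entries in groups of eight gives 2048 groups, with 64kB pages.

```python
PAGE_SHIFT = 16                    # 64kB pages
ENTRIES = 16384
GROUP_SIZE = 8
GROUPS = ENTRIES // GROUP_SIZE     # 2048 groups


def group_index(vaddr):
    """Hypothetical hash: fold the virtual page number down to a group number."""
    vpn = vaddr >> PAGE_SHIFT
    return (vpn ^ (vpn >> 11)) % GROUPS


def translate(table, vaddr):
    """Return the physical address for vaddr, or None on a miss."""
    vpn = vaddr >> PAGE_SHIFT
    for entry in table[group_index(vaddr)]:   # hardware compares all eight at once
        if entry is not None and entry[0] == vpn:
            return (entry[1] << PAGE_SHIFT) | (vaddr & ((1 << PAGE_SHIFT) - 1))
    return None


table = [[None] * GROUP_SIZE for _ in range(GROUPS)]
table[group_index(0x12345678)][3] = (0x1234, 0x0042)   # (virtual page, physical page)
hit = translate(table, 0x12345678)
miss = translate(table, 0x99990000)
print(hex(hit), miss)
```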

December 7, 2021

Most recently I have been working on the Thor processing

core version 2021. I post in a blog style almost daily at anycpu.org. Thor

is another 64-bit processor. The ISA features support for vector

operations. Instructions are 16, 32, 48, or 64 bits, but most instructions are 48-bit. There are 64 GPRs. The project is located on GitHub.

April 13, 2020

Added a chapter from a book I've been working on to the flash fiction section of the website. Lately I've been delving into the format of numbers, in particular the posit number

format which promises higher accuracy and greater dynamic range than

regular floating-point numbers.

February 27, 2020

I've been browsing the S100Computers.com site. Wanting to expand the FPGA computer into multiple boards, I was searching for a suitable bus standard to connect the boards. Several bus standards come to mind, PCI Express being a more recent one. I like the high-speed serial nature of PCI Express; it makes a lot of sense to save pin counts and it offers great performance as well. It is, however, somewhat of a closed bus standard. Other bus standards that come to mind are PCI, ISA, NuBus, and ECB bus. I studied them all. They all have features I like and dislike. I'd like to see a bus with 48-bit addressing, for instance. It's possible to modify the bus standards slightly to get 48-bit addressing. I'm leaning towards developing my own bus standard for hobby use. I want prototype boards to be small, and several of the bus standards have connectors that are too large. I've sketched out a high-speed serial bus that uses HDMI transceivers. Not as fast as PCI Express but hopefully still useful. The interesting thing about using HDMI is the clock and signal recovery capabilities. Using differential pair signalling (TMDS) should help with noise immunity. Just a thought for now.

February 9, 2020

Most recently working on the Petajon

project, a 64-bit machine with the intention of debugging the Femtiki operating system. Not so recently created a

32-bit version of the 6522, via6522 to go along with the uart6551. As usual

the cores are under my Github account.

July 15, 2019

The most recent update of the website is a reference to

the uart6551 core on the 6502 page. The uart core is 32-bit with the low order eight bits

register compatible with a 6551. It features FIFOs

(not present on a 6551) and extended baud rate selection.

June 8, 2019

I spent some time researching and coming up with versions

of a reciprocal square root estimate. The estimate is used in computer

graphics in particular for shading effects. One

implementation for reciprocal square root was used in the Quake game. I

coded a pretty direct version of this algorithm in Verilog using

floating-point components previously developed for multiply and

add/subtract. Then I created a second version using a state machine to

eliminate a couple of the multipliers by using them sequentially. Finally,

I came up with a table version of the reciprocal square root estimate

that's not based on the algorithm. The table lookup version is the smallest and fastest core; it's also the least accurate. The table lookup version is

accurate to better than 3%. All three versions were placed in the same file

and selectable via constant definitions. The code for the core is available

on my Github account under the rtfItanium core, floating point unit.
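
For readers who have not seen it, the widely published Quake-style estimate can be written in Python as below. This is a software sketch of the well-known algorithm, not the Verilog from the rtfItanium repository; the function name quake_rsqrt is chosen here for illustration. It reinterprets the single-precision bit pattern as an integer, forms a first guess with the magic constant, and then applies one Newton-Raphson step.

```python
import struct


def quake_rsqrt(x):
    """Reciprocal square root estimate for a positive single-precision value."""
    i = struct.unpack("<I", struct.pack("<f", x))[0]   # reinterpret float bits as an integer
    i = 0x5F3759DF - (i >> 1)                           # magic-constant first guess
    y = struct.unpack("<f", struct.pack("<I", i))[0]   # back to a float
    return y * (1.5 - 0.5 * x * y * y)                  # one Newton-Raphson refinement


# Largest relative error over a handful of sample inputs.
worst = max(abs(quake_rsqrt(v) * v ** 0.5 - 1.0) for v in (0.01, 0.5, 1.0, 2.0, 3.0, 100.0))
print(worst)
```

The Newton-Raphson line is where the multiplies come from, which is why a sequential state-machine version can trade speed for fewer multipliers.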

The table lookup version makes use of two tables and conditional logic which forces the output to infinity for suitably small values of input. It's interesting because a 16k entry table was compressed down to 8k entries by noting where values were infinite. At entry 8129 of 16384 and beyond, values become meaningful. 64 entries before 8192 are stored in a separate small table (68 entries), then entries 8192 to 16383 are stored in an 8192-entry table. What is stored? The tables can be compressed by noting that the estimate won't be accurate to more than six bits because that's all the mantissa bits that can be used to index into the table. So, the table only stores nine bits of the mantissa of the estimate. Seven bits of the table are used to store the exponent of the estimate. In almost all cases only seven bits of the exponent need to be stored because the top bit is set to zero by the square root operation. The cases where this isn't true are fit into the smaller 68 entry lookup table.

June 3, 2019

It's been a while since I updated the site. I've been busy working on the FT64 and then the rtfItanium. The rtfItanium is a three-way superscalar core capable of executing up to three instructions at a time. In some ways it's simpler than the FT64 though. It makes use of 40-bit instructions in a 128-bit bundle with an 8-bit template. The instruction decoder is simpler than in FT64. The source code for the core is located under my github account.

October 8, 2018

The Goldschmidt divider is fast and

reasonable to implement. It makes use of two multipliers and a shift

register. The Goldschmidt divider is described adequately in several

different webpages. A key to getting the divider to work well is to choose

the first factor carefully.
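
A floating-point sketch of the Goldschmidt iteration in Python is shown below. It is an illustration under simple assumptions rather than the FT64 hardware: the function name is made up, the denominator is normalised with math.frexp (the software stand-in for the shift), and the first factor is simply 2 minus the denominator rather than a carefully chosen starting value.

```python
import math


def goldschmidt_divide(n, d, iterations=5):
    """Approximate n / d for d > 0 by driving the denominator towards 1."""
    mantissa, exponent = math.frexp(d)        # d = mantissa * 2**exponent, 0.5 <= mantissa < 1
    num = math.ldexp(n, -exponent)            # scale the numerator by the same power of two
    den = mantissa
    for _ in range(iterations):
        factor = 2.0 - den                    # both multiplies below share this factor
        num *= factor
        den *= factor
    return num


q = goldschmidt_divide(355.0, 113.0)
print(q)
```

Each pass squares the distance of the denominator from 1, so a better first factor directly reduces the number of passes needed.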

The FT64 project is coming along

nicely. A test system has been built and loaded into an FPGA. It's still a

challenge to get everything working. The test system includes a GPU which

executes a subset of the FT64 instruction set.

March 12, 2018

Most recently I've been working on a project to emulate the 6567 chip using an FPGA and Verilog code. The project is called FAL6567 and the project outputs are in my Github account. Part of the project is a 65816 based low power computer.

Slightly less recently the FT64 project was born. In its current rendition it's a two-way super-barrel processor with 32 hardware threads. FT64 uses a 36-bit instruction encoding. Also on Github.

October 13, 2017

I've stayed

away from the tech side of things for a couple of months now. It's good to

take a break from whatever you're doing once in a while.

I've been busy playing video games, setting up a stock trading account, and

doing illustrations for a book. The most recently added flash fiction is a

story called "Birth of a Mutant". Nothing like a home-made

nuclear reactor to warm up those cold winter nights. Like most of my

stories it's a mash of real and fictional events.

June 30, 2017

Most recently

I've been experimenting with grid computers. FPGAs

are large enough to support multiple processing cores. The first grid

computer was made from multiple (56) Butterfly16 cores. Each node in the

grid has access to 8kB ram and rom along with a router. The grid computer

doesn't do much at the moment besides display the

results of ping operations to other nodes from the master.

January 8, 2017

Added an

updated PSG32 (programmable sound generator or sound interface device) to

the audio cores section. It uses 32-bit frequency accumulators rather than 24-bit to allow a higher

range of input clock frequencies to be used. Registers are now 32 bits

wide. A couple of new features have been added to the core including FM

synthesis and reverse sawtooth waveforms.

December 13, 2016

I've moved back

to playing with "firm" ware. My most recent endeavour is the DSD7

(Dark Star * Dragon Seven) core. It's a 32 bit

core with 80 bit extended double precision

arithmetic. I really wanted the core to help validate an FPU. It seems to

work okay. For the next core I'd like it to support the 80

bit format and one thought is to just make the entire core 80 bits.

There are a lot of problems dealing with 80-bit quantities when memory is often a power of two in size (32/64/128 bits). For DSD7 the problem was dealt with by using triple precision (96 bits) for a storage format even though the hardware only supports 80 bits. Using 96 bits rather than 80 bits had the benefit of keeping the stack word aligned.

Why not just use 64-bit double precision? Sometimes it doesn't have enough precision. It's only about 16

digits. Suppose you want to work with numbers accurate to six decimal

places, that leaves only 10 digits to the left of the decimal available. In

some circumstances that isn't quite enough. 80 bit

precision gives a few extra digits. One thought I have for an FPGA based

FPU is to use 88 bit precision. The multipliers in

the FPGA can produce efficient 72 bit results

which would be good for the mantissa. That's about 21 digits. 72 bit mantissa + 15 bit exponent + 1 sign bit is 88

bits. A larger exponent isn't really needed, the 128 bit

IEEE format uses only a 15 bit exponent. Going

with more mantissa bits in an FPGA uses resources less efficiently.
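
The digit counts quoted above follow from multiplying the number of mantissa bits by log10(2). A short Python check is given below; the mantissa widths used for the standard formats (53 bits for 64-bit double including the hidden bit, 64 bits for 80-bit extended) are the usual IEEE and x87 figures rather than numbers stated in this post.

```python
import math


def decimal_digits(mantissa_bits):
    """Approximate number of decimal digits carried by a binary mantissa."""
    return mantissa_bits * math.log10(2)


for name, bits in (("64-bit double", 53), ("80-bit extended", 64), ("proposed 88-bit", 72)):
    print(f"{name}: {decimal_digits(bits):.1f} digits")

total_bits = 72 + 15 + 1   # mantissa + exponent + sign for the proposed format
print(total_bits)
```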

October 16, 2016

The latest batch

of work has been on a simple .MNG file viewer. It is capable of viewing MNG

files in the simplest format. Finray has been

extended with the ability to loop back and parse multiple frames of

information. From this it can generate simple animation. It stores

sequences of PNG bitmaps which can then be loaded with FNG (The MNG file

viewer) and turned into simple MNG files.

April 27, 2016

For the past

couple of weeks bitmap controllers have been on my mind. It's amazing how

something fundamentally simple can get to be fairly

complex. The basic operation can be summed up in a single line. A

bitmap controller reads through memory in a linear fashion and outputs to a

display. However, once you throw in options to support multiple display resolutions and color depths things start to get complex. For the latest bitmap controller, added on top of simple display capability is pixel plotting and fetching. Pixel plot / fetch is a reasonable operation to perform in a bitmap controller as bus arbitration for memory is already present. Depending on the color depth pixels may fit unevenly into memory locations. This can result in complicated software to fetch or store a pixel. Software can be made simpler by providing a hardware pixel plot and fetch.

March 08, 2016

I've been

experimenting with ray-tracing and come up with a

"simple" ray-tracing program. The program uses a ray-tracing

script file (.finray) to

generate images. The script language supports generation of random vectors

so that random colors and positions may be used. It also supports composite

objects and repeat blocks. The display of a group of objects may be repeated a number of times. The image below

shows some sample output.

March 03, 2016

I took a break

from FPGA CPUs for a bit to develop some games.

I created a rendition of the venerable asteroids game. It's available for

download in the software directory.

Jan 09, 2016

I've been

experimenting with error-correction for the memory components of the latest

system. I found a bad bit in the host system and the way to work around it

was to use error correcting memory components. The diagram below shows the

error correction associated with DRAM memory. It stores an eight-bit byte plus five syndrome bits in a sixteen-bit memory cell. The reason I chose to error

correct on a byte basis rather than a word basis is that correcting on a

byte basis doesn't require implementation of read-modify-write cycles.

Once

error checking is included there is some justification for using bytes

larger than eight bits in size. A five bit

syndrome can provide error correction information for up to eleven data

bits not just eight bits. Using eleven bit bytes

plus five bits for error checking it would fit nicely into 16 bits. One

would likely be using a 16 bit path to store an eight bit byte plus five bit

syndrome to memory. So why not use all the bits and go with eleven bit bytes instead ?
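
The eleven-bit figure is consistent with an extended Hamming code (single error correction plus double error detection), where one of the five bits is an overall parity bit. That choice of code is an assumption here; the post does not name the code used. Under that assumption the arithmetic can be checked with a short Python sketch, with function names invented for the example:

```python
def secded_check_bits(data_bits):
    """Smallest number of check bits for single-error-correct, double-error-detect."""
    r = 1
    while (1 << r) < data_bits + r + 1:   # Hamming condition for single error correction
        r += 1
    return r + 1                          # one extra overall parity bit for double detection


def max_data_bits(check_bits):
    """Largest data width that a given number of SECDED check bits can protect."""
    r = check_bits - 1
    return (1 << r) - r - 1


print(secded_check_bits(8), secded_check_bits(11), max_data_bits(5))
```

Eight data bits and eleven data bits both need five check bits under this scheme, so the eleven-bit byte fills the 16-bit cell exactly while the eight-bit byte leaves three bits unused.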

October 24, 2015

Most recently

I've been working on porting Fig Forth 6502 to the RTF6809 and converting

it to use 32-bit Forth words. It doesn't quite work yet, but it's close. Forth is an interpretive computer language. I hope it will be able to make use of the RTF6809's 32-bit address space. The work is posted on my github

account.

June 25, 2015

I've started

yet another FPGA processor project called Dark Star Dragon One (D.S.D.1).

It features variable length oriented instructions, segment registers, branch registers, and multiple condition code registers. Yes, this does mean I'm shelving the FISA64 project

for now.

The author is of the opinion that any serious processor

will have variable length instructions, the improvement in code density and

cache usage is just too great to avoid. 16-bit instructions were added to FISA64 and improved code

density by about 20%. Having an inherently variable length architecture

should improve things even more.

Segment registers do get used in general purpose

applications. DSD1 will be reusing some of the segmentation model from the

Table888 project.

The branch register set is really just

a collection of registers that are specially defined in most instruction

sets. This set includes the program counter, exceptioned

program counter, return address register and others. In this design they

are given their own explicit register array.

April 21, 2015

FISA64 is

continuing to occupy my time. I've been posting about it frequently in blog style at anycpu.org. Yesterday's work was on the

compiler try/catch mechanism and getting CTRL-C events to be handed to

tasks. In the past month I've written a system emulator for the FISA64 test

system and have been using it to test out software. I added then removed

bounds registers from the processor design, then added a simpler check

(CHK) instruction instead.

March 15, 2015

Tonight's quandary is a design decision that leaves the

same FISA64 branch instruction branching to one of two different locations

depending on whether or not it's predicted taken. FISA64

makes use of immediate prefixes to extend immediate values beyond a 15-bit limit set in the instruction. Branch instructions can't make proper use of an immediate prefix because, in order to keep the hardware simpler, they don't detect an immediate prefix at the IF stage. (There is no requirement for conditional branching more than 15 bits.) However, a branch instruction just uses the same

immediate value that is calculated for other instructions in the EX stage. This could lead to branches branching to two

different locations if an immediate prefix is used for a branch.

For example, suppose a prefix is used with a branch, BEQ *+$100010 for instance (the $100000 displacement would require a prefix). Then the branch will branch to *+$10 if it is predicted taken (ignoring the prefix), but to *+$100010 if it's predicted not taken, then taken later in the EX stage.

If the branch is predicted taken, it'll branch using the 15-bit displacement field from the instruction. If the branch is predicted not taken, but is taken later in the EX stage, it'll branch using the full immediate value,

which with prefixes could be up to 64 bits. The solution is that the

assembler never outputs branches with prefixes. There is no hardware

protection against using an immediate prefix with a branch.
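
The two outcomes can be reproduced with a small Python model. This is a sketch under assumptions: the function names are made up, and it assumes the 15-bit field in the instruction holds the low bits of a displacement counted in 4-byte words, with the prefix supplying the upper bits. That reading matches the BEQ *+$100010 example above.

```python
def sign_extend(value, bits):
    """Interpret the low `bits` of value as a two's complement number."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def if_stage_target(pc, full_word_disp):
    """Predicted-taken path: only the 15-bit field in the instruction is seen."""
    return pc + (sign_extend(full_word_disp, 15) << 2)


def ex_stage_target(pc, full_word_disp):
    """Taken-in-EX path: the prefix-extended immediate is used."""
    return pc + (full_word_disp << 2)


pc = 0x1000
disp = 0x100010 >> 2                      # BEQ *+$100010, displacement counted in words
if_offset = if_stage_target(pc, disp) - pc
ex_offset = ex_stage_target(pc, disp) - pc
print(hex(if_offset), hex(ex_offset))
```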

In the IF stage, rather than

look at the previous instructions for an immediate prefix, the processor

simply ignores the fact a prefix is present, and sign extends the branch

displacement in the instruction without taking into

account a prefix.

IF stage:

if (iopcode==`Bcc && predict_taken) begin
    pc <= pc + {{47{insn[31]}},insn[31:17],2'b00};  // Ignores potential immediate prefix
    dbranch_taken <= TRUE;
end

However, the EX stage uses a

full immediate including any prefix, also to simplify hardware.

EX stage:

`Bcc: if (takb & !xbranch_taken)
    update_pc(xpc + {imm,2'b00});  // This uses a "full" immediate value

December 28, 2014

Addressing

modes in a modern processor are boring. For the typical RISC processor only

a single address mode is supported because it's the minimum needed. That

address mode is register indirect with displacement. A register is added to

a displacement to form the memory address. Sometimes indexed addressing

using two registers is also supported. Few new processors have available

memory indirect addressing modes. The plethora of addressing modes on an

older processor like the 680x0 series made the processor interesting. The

key benefit to memory indirect addressing modes is that it allows pointers

stored in memory to be larger than the size of a register. This is put to

good use in the 6502 processor. In the latest ISA FISA64 memory indirect

address modes are available to experiment with. A 128-bit

address space is supported using memory indirect address modes.

December 12, 2014

Tonight's

lesson is one about clock gating. When a clock is gated

it introduces a buffer delay to that clock tree. If the ungated version of

the clock is also being used, the buffer delay in the ungated version needs

to be matched with that of the gated clock. Otherwise, if the buffer delay isn't matched, the P&R tools may have a heck of a time

trying to meet timing requirements.

December 10, 2014

IEEE standard

for floating point isn't the simplest thing to get working, or so I'm

finding out. I've spent some time recently working with floating point

units both standard and non-standard. One can do a lot of computing without

floating point. Many early micro-processors didn't support floating point

at all. How to incorporate floating point into an older system using an eight bit micro came to mind. FT816Float is a memory

mapped floating point device oriented towards byte

oriented processing. It's a bit non-standard and makes use of a

two's complement mantissa rather than a sign-magnitude one.

November 7, 2014

Yet another ISA was born this past week. FISA64 is a 64-bit ISA that attempts to overcome the shortcomings run into with the Scarerob-V ISA. Rather than having a segmentation model

that works automatically behind the scenes, the FISA64 ISA requires

"manual" manipulation of the segment registers. This is possible

by supporting two modes of operation: kernel and application. In kernel

mode the address space is a flat unsegmented one. This allows the segment

values to be manipulated without affecting the processor's addressing. The

segmentation model supports up to a 128 bit

address. The processor does not support a paging system.

November 3, 2014

I spent the past week or so working on a new ISA. Well, I synthesised an implementation of it, and it's too big: 122% of the size of the FPGA. It's a shame because it had a nice segmentation and protection model, similar to the x86 series. Projects tend to get bigger with bug fixes, so there's no way to shoehorn it into the FPGA. So for now it's another project that's being shelved. Time to get back to a basic simple 32-bit ISA. Why not RISC-V? I'm not overly fond of the ISA layout and the branch model. There are also fewer instructions than I like to see in the base model. Sure, the ISA can be extended with brownfield or greenfield extensions, but then there's the issue of compatibility. If one is going to go to the trouble of extending the ISA and developing toolset changes to support the extended ISA, why not just start one's own ISA? One wants to use an existing ISA to leverage the use of the ISA's toolset.

October 31, 2014

Scary Halloween. They're back. The nightmare of segment

registers. I wasn't going to include them in the latest ISA design, but

I've changed my mind after reading up on how they are used in a modern OS.

Normally segment registers (CS, DS, SS) are initialized to zero and left

alone. However other segment registers (FS, GS) can be used like an

additional index register in an instruction to quickly point to thread

local storage and global storage areas. So I've

added segment base registers to be used in this fashion to the latest ISA

design. The latest ISA in the works is called Scarerob-V

given that it's Halloween, and other recent

events. Scarerob-V ISA makes use of variable

length instructions which are much shorter than those of Table888.

October 22, 2014

The RISC-V ISA

(riscv.org) has a lot going for it.

Variable length instructions, extensibility with 32-, 64-, and 128-bit versions, a simple base ISA, and a number of standard extensions. It seems to be one

worth studying and I've spent some time studying this recently. It's become

an implementation project on my todo list. The

RISC-V ISA is an ISA that attempts to please all. It'll be interesting to

see how well it works in practice.

October 4, 2014

A couple of

Flash-Fiction stories have been added recently to the website. A page for character

descriptions has also been added. The Finitron

verse is slowly expanding.

August 19, 2014

Back to the

drawing board. I've started working on yet another soft processor core,

expanding my toybox further. The instruction set

will be similar to Table888's. Support for a

segmentation model is not going to be provided. Also dropped is index

scaling on the indexed addressing mode. The new core will stay with a 40 bit fixed size opcode, and 256 registers.

July 15, 2014

I've taken a

break from my normal HDL artistry to work on a piece of software that

generates artificial maps. The basic map generator is based on something

called a Voronoi fracture map. The fracture map

simulates lumps of matter composing the planet. Previously the map

generator was based on a fractal generator which generated nice looking maps but they weren't very realistic (it placed

mountains in the centre of continents). Now mountains are along the coast

and where there is extreme difference in elevation, more in line with

reality.

July 10, 2014

Learning more

about the .ELF file format and how to link object files together was the

order of the day. .ELF files are a popular standard file format used to

represent executable and relocatable files. I was looking at the extended

ELF64 file format developed by HP/Intel with the intent of supporting the

format for the Table888 project. The A64 assembler can output .ELF files in

addition to binary and listing files. In theory the L64 linker can link together .ELF relocatable files produced by the

assembler. It's the first time I ever wrote a linker, and there are still a

couple of issues to resolve with it.

July 01, 2014

Got hung up on mnemonics. The compiler called the exclusive or

function XOR and the assembler recognized only EOR. A quick fix to the

assembler allows it to recognize XOR as well as EOR as the same

instruction. I can never make up my mind on that one, so I'll just support

it both ways. All kinds of different mnemonics are used to represent

essentially the same instructions in different assembly languages. Is that

ADDC the same as the ADC in another instruction set? One has to research carefully sometimes while working with assembler code. Does SED set the decimal mode or set the direction flag?

June 21, 2014

I needed

something small and simple to test the C64 compiler with and I needed some

sort of file system available for my system. Luckily, I found ChaN's FatFs, which fits the bill. ChaN's system provides all the basics for a FAT file system operating in an embedded system. All one needs to do is supply a few interface routines for low-level disk access. I've been busy working towards a simple SD card access system. My current goal is to be able to load and run a file from the card. After a few compiler fixes I've got as far as being able to display a directory. It's a slow-going circus dance.

|

|

|

June 12, 2014

|

|

FPP (Finch's

macro pre-processor) has been updated with some bug fixes. It's undergoing

testing by compiling the MINIX system. The fixes include an operator

precedence problem fix and a macro expansion bug fix. The pre-processor was

originally written in 1992 so it's now 22 years old. Recent work has been

on the C64 compiler, modifying it to support the Table888 processor.

|

|

|

June 8, 2014

|

|

Tonight's

escape is clock throttling. Clock throttling or controlling the clock rate

can be used to control power consumption. The lower the clock frequency is,

the less power is used. Dynamic power, as we all know, is roughly proportional to clock frequency. Being able to control power consumption is one place where a gated clock might be used. Generally speaking, gating clocks is not a good idea, but occasionally it is done. Fortunately, the FPGA vendor provides a clock gate

specifically for handling gated clocks. Incorporated into Table888 (the

latest processor work) is a clock gating register. This register is filled

with a pattern that controls the clock gate, for power control.
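As a rough model of pattern-based throttling, the sketch below assumes the pattern is stepped through one bit per input clock and that a 1 bit lets that clock pulse through the gate. How Table888 actually consumes the pattern is not described here, so the register width, the bit order and the function names are assumptions.

```python
def gated_clock(pattern, width, cycles):
    """Return a list of booleans, one per input clock cycle.

    True means the clock pulse passes the gate on that cycle.
    Assumes the pattern repeats every `width` cycles, lowest bit first.
    """
    return [bool((pattern >> (i % width)) & 1) for i in range(cycles)]


def effective_frequency(f_in_hz, pattern, width):
    """Average output clock rate for a repeating gate pattern."""
    ones = bin(pattern & ((1 << width) - 1)).count("1")
    return f_in_hz * ones / width
```

Under these assumptions a four-bit pattern of 0101 passes every other pulse, halving the average clock rate.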

|

|

|

June 3, 2014

|

|

NOP Ramps are

my latest craze. In order to avoid really complicated

hardware, the concept of NOP ramps can be used. I'm talking about what

happens when instructions cross page boundaries in a system with memory

management. The problem with instructions spanning page boundaries is

classic. If there is a memory management page miss, the instruction needs to be re-executed once the missing page is brought into memory. To ensure proper operation, both the missing page and the previous page need to be in memory. Re-executing instructions can be a non-trivial problem. Fortunately, what I'm working on has only a handful of instructions that can cross page boundaries. Rather than attempt to re-execute those instructions, the assembler forces the instruction into the next page of memory by inserting NOP instructions. Hence it's the NOP instructions that span the page boundary. If there is a need to re-execute them, it is trivial to do so. NOP ramp example:

|

00008FF0 41 F8 2A 90 00    bne fl0,kbdi2
00008FF5 16 01 24 00 00    ldi r1,#36
00008FFA EA EA EA EA EA    ; imm
00009000 EA EA EA EA EA
00009005 FD 70 FF 03 10
0000900A A0 00 01 00 18    sb r1,LEDS
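The padding decision can be sketched as follows. This is a simplified model, not the assembler's code: it assumes a 4096-byte page (the page size is not stated here), treats each EA byte as a one-byte NOP, and pads only as far as the start of the next page, whereas the listing above pads a little past the boundary. The names `nop_ramp`, `NOP` and `PAGE_SIZE` are made up for the example.

```python
NOP = 0xEA        # NOP byte as it appears in the listing; assumed one byte long
PAGE_SIZE = 4096  # assumed page size


def nop_ramp(address, length, page_size=PAGE_SIZE):
    """Return the NOP bytes to emit before an instruction.

    If an instruction of `length` bytes placed at `address` would cross
    a page boundary, pad up to the start of the next page so the
    instruction lies entirely within one page. Otherwise emit nothing.
    """
    first_page = address // page_size
    last_page = (address + length - 1) // page_size
    if first_page == last_page:
        return bytes()
    pad = page_size - (address % page_size)
    return bytes([NOP]) * pad
```

For example, a 10-byte instruction sequence at 0x8FFA would cross into the page at 0x9000, so six NOP bytes are emitted and the sequence starts at 0x9000 instead.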

|

|

|

|

May 15, 2014

|

|

One can write a

lot of code using just three registers if one codes in assembly language

and is careful. With just a few registers to work with, a byte-code

processor can offer high code density. This is great for microcontroller

type applications where memory space may be constrained. What if one wants

more registers in order to support a compiled language? RISC processors were originally designed for

high performance with compiled languages. The typical RISC processor uses a

fixed size instruction format. Unfortunately, one size does not fit all

instructions, and the result is that code density for the typical RISC

style suffers. To improve code density, one can look at the typical operations performed and encode them in as few bits as possible. Allowing the size of instructions to vary in a design based on a RISC processor results in a kind of hybrid processor, the worst of both worlds: lower code density and higher complexity. Unfortunately, processors become complex anyway when they have to support legacy systems. Trends for currently popular architectures include

variable instruction sizes (ARM, Intel) and flags registers (ARM, Intel, SPARC). If one removes the limitations of a fixed-size instruction set, one can optimize instructions for code density. It's amazing how adequate a branch instruction composed of an eight-bit opcode and an eight-bit displacement is. This sixteen-bit instruction covers about 90% or more of the cases

where a branch would be used. The RTF65003 strives to have a good mix of

legacy support, while adding additional registers and increasing the

addressing space. It is necessarily more complex than a new design.

|

|

|

May 14, 2014

|

|

What's better

than the RTF65002? The RTF65003. There are

several things I don't like about the RTF65002 so

I started working on a better version. One item is the branch target

address. In native mode on the RTF65002 the target address is computed

relative to the address of the instruction; this is different than the '02

and '816 where the address is computed relative to the address of the next

instruction. The '003 follows the convention set by the '02. Another issue

is the different code and data addresses of the RTF65002. The RTF65002 is a

word addressed machine for most data operations and this makes it difficult

to use with a compiled language like 'C'. I decided before putting a lot

more work into porting software, to create an improved version of the

processor. The RTF65003 has byte addressable memory operations, and greater

support for different operand sizes. Byte (8-bit) or character (16-bit)

prefix codes can be applied for memory operations to override the default

of a word sized operation. Prefix codes are used to modify the behaviour of

following instructions rather than creating a whole bunch of rarely used

instructions.
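The difference between the two branch conventions is only the base address the displacement is measured from. A small sketch, with made-up function names and an assumed two-byte branch (opcode plus one displacement byte) in the usage note:

```python
def branch_displacement(branch_addr, target_addr, instr_len, relative_to_next):
    """Compute a branch displacement under either convention.

    relative_to_next=True  : base is the address of the next instruction
                             (the '02 and '816 convention, followed by the '003).
    relative_to_next=False : base is the address of the branch itself
                             (RTF65002 native mode).
    """
    base = branch_addr + instr_len if relative_to_next else branch_addr
    return target_addr - base


def fits_in_signed_byte(displacement):
    """True if the displacement can be encoded in eight signed bits."""
    return -128 <= displacement <= 127
```

For a two-byte branch at 0x1000 targeting 0x1010, the displacement is 14 relative to the next instruction and 16 relative to the branch itself, so the same target assembles to different bytes under the two schemes.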

|

|

|

May 12, 2014

|

|

Compiled code

for the RTF65002 generated signed multiply instructions which hadn't been

added to the processor. Two possible solutions were to either change the

compiler so that it generated code to perform sign adjustments or modify

the processor to include signed multiplies. Signed multiplies had been left out on the assumption that adding them to the processor would create too much additional overhead, but I decided to try adding them. Well, lo and behold, adding the functionality made the processor smaller and faster (by about 5%!). I guess adding the opcodes simplified the instruction decoder.

Encouraged by this good fortune I decided to try adding signed division and

modulus operations as well. Doing this resulted in almost no impact to the

size or speed of the processor. So, the RTF65002

now supports both signed and unsigned multiply / divide / modulus

operations.
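For comparison, the compiler-side alternative of sign adjustments around an unsigned multiply might look like the sketch below. It is a generic illustration, not the code the compiler would have generated: the 32-bit operand width, the double-width result and the function name are assumptions.

```python
def mul_signed(a_bits, b_bits, width=32):
    """Signed multiply built from an unsigned multiply.

    Operands are raw two's complement bit patterns of `width` bits.
    Returns the double-width product as a raw two's complement pattern.
    """
    mask = (1 << width) - 1
    sign = 1 << (width - 1)
    a_bits &= mask
    b_bits &= mask
    negate = bool((a_bits ^ b_bits) & sign)  # result is negative if signs differ
    a_mag = (-a_bits) & mask if a_bits & sign else a_bits  # absolute values
    b_mag = (-b_bits) & mask if b_bits & sign else b_bits
    product = a_mag * b_mag                  # the unsigned multiply
    if negate:
        product = -product                   # final sign adjustment
    return product & ((1 << (2 * width)) - 1)
```

Each signed multiply costs up to three negations plus the sign test on top of the unsigned multiply, which is the overhead a hardware signed multiply avoids.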

|

|

|
